Researchers using surveys to gather data have their work cut out for them. Not only do they have to determine whether to conduct surveys in person, by phone or mail, or online, they have to decide which types of survey scales to use. That’s not all; it’s also important that researchers actively work to avoid survey bias. At best, a biased survey can produce useless data; at worst, it can result in a company fixing a problem that never really existed or spending millions on new product development when there are actually no real buyers in the marketplace. So, let’s take a look at the different types of survey bias and how to avoid them.


What Does It Mean to Be Biased?

Before we dive into survey bias, let’s look at the word itself. Most of our readers already know what it means to be biased, but it’s worth making sure we’re all on the same page before continuing. A bias is a personal and sometimes unreasonable judgment for or against someone or something. Someone who is biased prefers one group of people, idea, or thing over another, often based on personal, unreasoned judgment, and behaves unfairly as a result.

It’s important to understand that bias is different from opinion. We all have opinions, and as researchers, opinions are exactly what we’re trying to extract from people! An opinion differs from a bias because, in forming one, people look at both sides of the coin and give their honest feedback, free from malicious intent or vested interests.

5 Different Types of Survey Bias

So what is a biased survey? When we apply the concept of bias to surveys, bias becomes a “systematic error introduced into sampling or testing by selecting or encouraging one outcome or answer over others.” (Thanks, Webster). Here are the five different biases that can be encountered in surveys.

What is Non-Response Bias?

Sometimes, people simply won’t participate in a survey. Prospective participants walk away, hang up the phone, throw out the mail, or delete the email, and their reasons for doing so can vary greatly. When a large share of the people you contact don’t respond, and their answers would have been very different from those of the people who did, the results can lead to misleading conclusions. This is known as non-response bias.

Here’s an example of non-response bias from the corporate world. Say you conduct a survey in a big company and ask questions about employee workload. Employees who are juggling a lot of projects may be too busy to answer, while those who aren’t struggling will take the time to respond and report that their workload is fine. The results of the survey will mostly reflect people with light workloads, giving the impression that all employees are doing okay when, in fact, many are overworked.

Non-response bias analysis can help in these situations. One technique researchers use to account for people who did not respond is to analyze the answers of the participants who responded last. Studies suggest that people who put off answering surveys often hold views similar to those of people who never respond at all.
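If you analyze responses in code, here is a minimal sketch of the late-respondent comparison described above, sometimes called wave analysis. It assumes that each response is tagged as “early” or “late” (for example, answered before or after a reminder). The workload ratings are invented, and the `wave_means` helper is hypothetical rather than part of any survey tool.

```python
from statistics import mean

# Hypothetical workload ratings (1 = very light, 5 = overwhelming), each tagged
# with the wave in which the person answered. The data is invented for illustration.
responses = [
    {"wave": "early", "workload": 2},
    {"wave": "early", "workload": 3},
    {"wave": "early", "workload": 2},
    {"wave": "early", "workload": 3},
    {"wave": "late", "workload": 4},
    {"wave": "late", "workload": 5},
    {"wave": "late", "workload": 4},
]

def wave_means(rows):
    """Group ratings by wave and return the mean workload rating for each wave."""
    waves = {}
    for row in rows:
        waves.setdefault(row["wave"], []).append(row["workload"])
    return {wave: mean(scores) for wave, scores in waves.items()}

means = wave_means(responses)
gap = means["late"] - means["early"]
print(means, round(gap, 2))
```

Suppose late respondents report noticeably heavier workloads than early ones. That suggests the people who never answered may be struggling too, so the overall average is probably too rosy.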

What is Response Bias?

Response bias isn’t so much a problem of getting people to answer the questions; rather, it’s a problem with the questions themselves. Both subconscious and conscious factors can result in less-than-truthful responses, so it’s important to word all questions carefully. We cover six examples of what we call “bias survey questions” or “bias-inducing questions” later on that can lead to response biases, along with how to minimize response bias by reframing questions.

What is Confirmation Bias?

The “big bad” of survey biases, confirmation bias happens on the back end, on the researcher’s side rather than in the survey design itself, which makes it more difficult to rectify. It comes into play when researchers evaluating survey data look for patterns that confirm what they already believe to be true and overlook data that says otherwise. When this happens, a misleading conclusion is reported back to the interested parties.

Here’s an example of confirmation bias: an app developer for a bank asks survey participants if they are using the app. The response is an overwhelming “yes,” and because the developer has a vested interest in the success of the app, he reports back that people love the app. However, an answer of “yes” in this instance doesn’t mean they like it—simply that they use it. They may be begrudgingly using it because there are no other options, they may be using it but hate it, or they may simply be trying to please the researcher.

Confirmation bias is one big reason many researchers favor surveying for quantitative data, such as with a multiple-choice questionnaire. That way, you know exactly which answer each respondent selected, leaving less room to read your own interpretation into what they meant.

What is Social Desirability Bias?

Our ideas about what others think of us hinge on what psychologists call our self-concept: our own beliefs about who we are. This means we often present our actions and words in a way that makes us look good to others, even when that picture is inaccurate. The tendency is so deep-rooted in our behavior that we usually think of people who don’t follow these norms as anti-social.

Here’s an example using an app again. Say a researcher is questioning an IT executive who thinks of themselves as a leader in the industry. If the executive is having difficulty using the app, they may not admit it, as this would challenge their self-concept. So, a skilled researcher would reframe the question. They might ask, “If you could redesign this for a Luddite, what would you do differently?” This way, the executive can talk about their issues with the app in terms of someone who doesn’t understand technology, while keeping their self-concept intact.

What is Sampling Bias?

Also known as selection bias, sampling bias occurs when researchers fail to choose participants properly. Ideally, research participants are chosen at random from among the people who match the study’s criteria. If participants aren’t randomly selected, the survey’s validity can be seriously compromised because the sample doesn’t accurately reflect the broader population. Here’s a great example of sampling bias.

It’s 1948. Harry S. Truman and Thomas E. Dewey are competing in the Presidential race. Pollsters survey voters to predict the winner, and the results heavily favor Dewey. The margin looks so wide that a confident Chicago Daily Tribune prints its paper with the headline “Dewey Defeats Truman” before the final tally is in. The result is the famous photo of a triumphant Truman, who ultimately wins, holding up the paper with a big grin on his face.

So what happened? A commonly told explanation is that pollsters relied heavily on the telephone. In 1948, telephones were far more common in wealthier households, which heavily favored Dewey, while many lower- and middle-income households without phones backed Truman. By sampling through a channel not everyone had, the pollsters didn’t get an accurate picture of the total population. (Historians also point to other sampling problems, such as quota sampling and polls that stopped weeks before Election Day.)
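To see how the sampling frame alone can skew results, here is a toy simulation. The numbers are invented and are not the real 1948 figures. It compares three things: the true share of Dewey supporters, the share among phone owners only, and the share in a simple random sample drawn from everyone. The electorate and the `dewey_share` helper are hypothetical.

```python
import random

# Hypothetical electorate of 1,000 voters as (owns_phone, candidate) pairs.
# The proportions are invented purely for illustration.
population = (
    [(True, "Dewey")] * 300
    + [(True, "Truman")] * 150
    + [(False, "Dewey")] * 180
    + [(False, "Truman")] * 370
)

def dewey_share(voters):
    """Fraction of the given voters who back Dewey."""
    return sum(1 for _, candidate in voters if candidate == "Dewey") / len(voters)

# Biased frame: only people reachable by phone can be surveyed.
phone_owners = [voter for voter in population if voter[0]]

# Unbiased frame: every voter has the same chance of being selected.
rng = random.Random(1948)  # fixed seed so reruns draw the same sample
random_sample = rng.sample(population, 200)

print(f"True Dewey share:     {dewey_share(population):.0%}")
print(f"Phone-only frame:     {dewey_share(phone_owners):.0%}")
print(f"Random sample of 200: {dewey_share(random_sample):.0%}")
```

In this made-up electorate, Dewey has 48% support overall. Among phone owners, he has about 67%. The random sample will usually land close to the true figure, because everyone had an equal chance of being picked.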

Bias Survey Question Examples

Biased survey questions generally fall into six categories. Here’s a look at each of them, with an example.

1. Leading Questions

A leading question “leads” the respondent toward a “correct” answer by wording questions in a way that sways readers to one side. For example, a survey may ask, “How dumb are the President’s policies?”, which puts it into the respondent’s head that, yes, the policies are dumb. A non-leading question would be, “What do you think of the President’s policies?”

Leading questions can also show up in scaled questions. For example, a satisfaction survey could ask participants whether they were extremely satisfied, satisfied, or dissatisfied. Because the scale offers more options on the satisfied side, it is biased and leads participants in that direction.
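If you build questionnaires programmatically, a quick check like the one below can flag a lopsided scale before it goes out. This is a minimal sketch. Each scale point carries a hypothetical valence score that you would assign yourself: positive for favorable, negative for unfavorable, and zero for neutral. The `is_balanced` helper is illustrative only.

```python
# Each scale point is (label, valence): > 0 favorable, < 0 unfavorable, 0 neutral.
# The valence numbers are hypothetical and assigned by the researcher.
lopsided = [("Extremely satisfied", 2), ("Satisfied", 1), ("Dissatisfied", -1)]
balanced = [
    ("Very satisfied", 2),
    ("Satisfied", 1),
    ("Neither satisfied nor dissatisfied", 0),
    ("Dissatisfied", -1),
    ("Very dissatisfied", -2),
]

def is_balanced(scale):
    """True if every favorable point has an unfavorable mirror of equal strength."""
    favorable = sorted(v for _, v in scale if v > 0)
    unfavorable = sorted(-v for _, v in scale if v < 0)
    return favorable == unfavorable

print(is_balanced(lopsided))  # False
print(is_balanced(balanced))  # True
```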

Read more about leading questions in our blog, 5 Types of Leading Questions with Examples + How They Differ From Loaded Questions.

2. Loaded Questions

Loaded questions contain a built-in assumption that more or less forces respondents to answer in a particular way. For example, “Where do you like to go on weekends?” assumes the respondent goes somewhere. But what if a respondent likes to stay home on the weekend? A better, unloaded question would be, “What do you like to do on weekends?” You can read more in our blog on leading and loaded questions.

3. Double-Barreled Questions

Researchers sometimes make the mistake of putting two questions in one. For example, “Do you like your boss and his policies?” This poses a problem if a respondent likes their boss as a person, but does not like his policies. Instead, the question should be broken into two separate questions.

4. Absolute Questions

Absolute questions are yes-or-no questions built around words like “always” or “never,” and they back respondents into a corner. For example, suppose you ask, “Do you always brush your teeth before bed?” Most people may brush before bed, but there are certainly times they forget or are too tired. So they may answer “no” even though the answer is yes 9 times out of 10. A Likert scale with a range of options can solve this issue.

5. Unclear Questions

Clarity is key when developing surveys, so avoid overly technical terminology, jargon, abbreviations, or acronyms that people may not understand. There are also times when you don’t want to be too specific. For example, if you’re conducting a survey about smartphone use, don’t use “iPhone” in your questions just because it may be the most popular model.

6. Multiple Answer Questions

Multiple-choice questions are a great survey method, but researchers need to make sure the answer options don’t overlap, so that only one option applies (unless they want participants to select all that apply). For example, suppose you ask someone how often they work remotely and provide the options “1-3 days per week” and “3-5 days per week.” A person who works remotely three days per week could select either option, which skews the results.
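A small script can catch overlapping answer ranges, and missing ones, before a survey launches. This sketch makes two assumptions: each option is an inclusive range of whole days, and, for illustration, a remote-work week runs from zero to five days. The `find_overlaps` and `find_gaps` helpers are hypothetical.

```python
from itertools import combinations

# Each answer option is (label, lowest value, highest value), inclusive.
Option = tuple[str, int, int]

def find_overlaps(options: list[Option]) -> list[tuple[str, str]]:
    """Return every pair of options whose ranges share at least one value."""
    return [
        (a[0], b[0])
        for a, b in combinations(options, 2)
        if a[1] <= b[2] and b[1] <= a[2]
    ]

def find_gaps(options: list[Option], lowest: int, highest: int) -> list[int]:
    """Return values in [lowest, highest] that no option covers."""
    return [
        n for n in range(lowest, highest + 1)
        if not any(lo <= n <= hi for _, lo, hi in options)
    ]

flawed = [("1-3 days", 1, 3), ("3-5 days", 3, 5)]
fixed = [("0 days", 0, 0), ("1-2 days", 1, 2), ("3-5 days", 3, 5)]

print(find_overlaps(flawed))    # [('1-3 days', '3-5 days')]
print(find_gaps(flawed, 0, 5))  # [0]
print(find_overlaps(fixed))     # []
print(find_gaps(fixed, 0, 5))   # []
```

Checking every pair with `combinations` is simpler than sorting and comparing neighbors. It also can’t miss an overlap between non-adjacent options, and the cost is trivial for the handful of choices a survey question has.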

5 More Ways to Avoid Survey Bias

Is it possible to make a survey without bias? You can get very close! Being aware of the types of bias and watching your wording, as described in the sections above, helps eliminate many errors. There are also a few other things to remember when designing your surveys.

1. Include “Prefer Not to Answer”

Some questions may not apply to every respondent. Others may make a respondent uncomfortable, leading them to answer dishonestly or drop out of the survey altogether. So, always include a “prefer not to answer” option.

2. Include All Possible Answers

Forgetting possible answers leads to bias, so include all options or a catchall “other” option.

3. Give Participants Motivation

To lower non-response rates, many survey creators offer a participation incentive upon completion of the survey, such as money, a discount code, or exclusive access to premium services.

Pro Tip: Make sure your incentive doesn’t change the type of people who respond. Know your target group, and offer an incentive that is useful to all of them.

4. End with Demographic and Personal Questions

People may drop out of a survey if questions get too personal at the beginning; save these questions for the end to obtain as much data as possible.

5. Resurvey Non-Respondents

Someone may be interested in the survey, just not at the moment they receive it—and then they forget to go back to it. A follow-up survey or reminder often gets non-respondents to take action.
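If you track invitations yourself, building the follow-up list is a simple set difference: everyone invited minus everyone who has already responded. The addresses and the `reminder_list` helper below are hypothetical. In practice, the two lists would come from whatever your survey tool lets you export.

```python
# Hypothetical contact lists, invented for illustration.
invited = {"ana@example.com", "ben@example.com", "chen@example.com", "dev@example.com"}
responded = {"ben@example.com", "dev@example.com"}

def reminder_list(invited: set[str], responded: set[str]) -> list[str]:
    """Return invitees who haven't responded yet, sorted for a stable order."""
    return sorted(invited - responded)

print(reminder_list(invited, responded))  # ['ana@example.com', 'chen@example.com']
```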

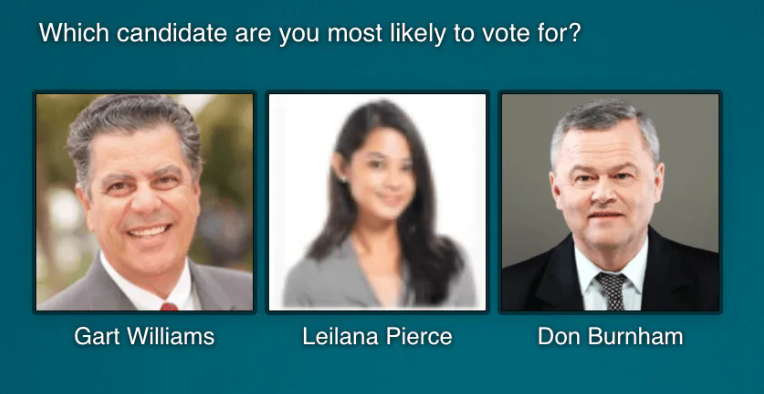

Survey Bias on Surveys with Images

While text is often the main culprit when it comes to survey bias, bias can also creep in through imagery. For example, if you’re conducting an election survey and some of the candidates’ faces are blurry, people may be nudged toward the candidates with clearer photos. So, when using a picture survey or picture poll, remember the following:

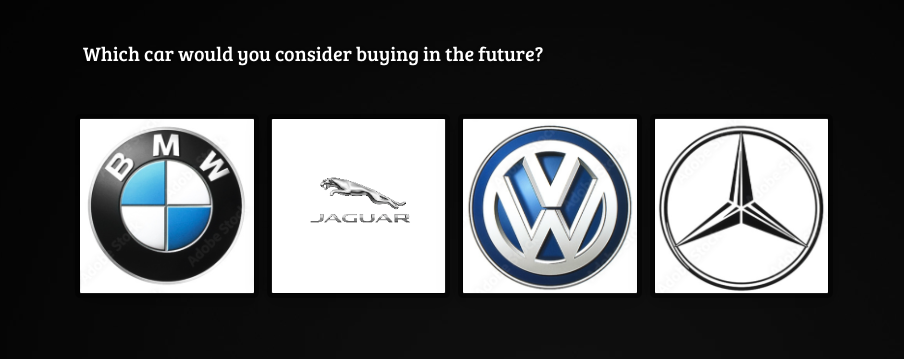

1. Picture Size

Make sure the subjects of the photos are always similar in size so the comparison is fair and impartial. In the example below, Jaguar is clearly at a disadvantage because its logo is much smaller than those of its competitors, which could affect respondents’ image selection.

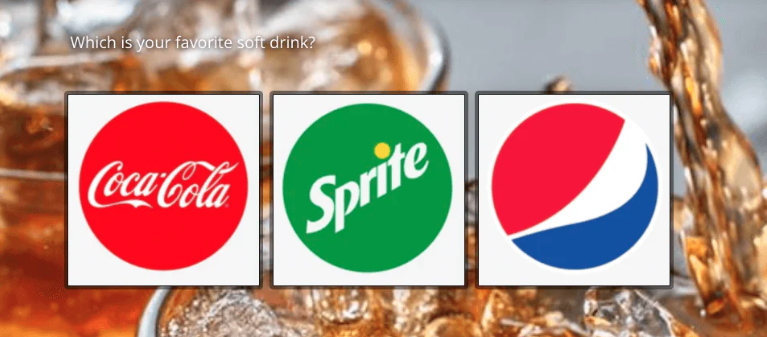

2. Background Image

In the below soda image survey example, a background with a brown cola image has been chosen. This could subconsciously lead someone to select a brown-colored soda instead of the clear soda, Sprite.

3. Picture Quality

Each image option should be of high quality so that every choice is presented impartially. In the example, candidate “Leilana” is unfairly represented with a low-resolution photo compared to the other candidates, which could affect people’s selection process.

4. Picture Choice

Choosing a photo that’s unflattering or unrepresentative of how the subject looks on a typical day can create bias. In the example below, New York and Venice are photographed on a beautiful day, while Shanghai is photographed on an unusually smoggy day.

Read more about how to avoid survey bias when using image surveys in our blog 5 Things to Avoid When Creating Image-Based Surveys.

Ready to Create Your Survey?

Now that you understand the types of survey bias that exist (in both text and visual surveys) and how to control bias to get unbiased opinions, you’re ready to go. SurveyLegend offers many benefits:

- When it comes to surveys, brief is best, so SurveyLegend automatically collects some data, such as the participant’s location, reducing the number of questions.

- People like eye candy, and many surveys are just plain dull. SurveyLegend offers pre-designed surveys that will get your participants’ attention!

- Today, most surveys are taken on mobile devices, and often surveys created on desktop computers don’t translate well, resulting in a high drop-off rate. SurveyLegend’s designs are responsive, automatically adjusting to any screen size.

What are you waiting for? You can get started with your online survey using SurveyLegend for free right now. Have comments or questions? We’re here for you.

