Survey validity and reliability are essential for producing accurate, trustworthy research results. Validity ensures that survey questions truly measure what they intend to—capturing the right construct through clear, unbiased wording and sound design. Reliability, on the other hand, measures consistency—whether repeated surveys would yield the same results under similar conditions. A valid survey might not always be reliable, and vice versa, but both are crucial for quality data. By focusing on test-retest reliability, internal consistency, sampling, question design, and statistical rigor, researchers can create surveys that are both valid and reliable, ensuring dependable insights for data-driven decision-making.

A conclusion is only as good as the data that informs it. Researchers understand this, so those conducting surveys strive to obtain the most accurate results. This means survey data should be both reliable and valid. But aren’t those two terms the same? While often used interchangeably, survey reliability and survey validity are two different things. In this blog, we’ll look at each so that you can create surveys that tick both boxes.

Create Your FREE Survey, Poll, or Questionnaire now!

The Difference Between Survey Reliability and Survey Validity

While survey reliability and validity are closely linked, each plays a different role when it comes to survey results. Both are concerned with accurately measuring the intended constructs. Validity is about question accuracy. It looks at how well your survey conveys its questions (and possibly answers, if they are provided). Validity asks, will participants understand exactly what you’re surveying them about? Sometimes, questions may inadvertently end up measuring reading comprehension rather than the intended construct, which can affect the validity of your results.

Reliability, on the other hand, looks at whether your survey questions would consistently result in the same responses from participants if the survey were repeated in the same situation. How likely are survey participants to respond the same way if they retook the survey later on? In test-retest reliability, this involves comparing responses from the initial survey to those from a second administration to assess the stability of your measuring methods over time.

Example of Survey Reliability vs. Survey Validity

The best way to highlight the difference between survey reliability and validity is with an example. Here’s a question from a student engagement survey created using SurveyLegend. The same kind of question appears in other settings too, such as an employee engagement survey that measures how engaged employees feel at work.

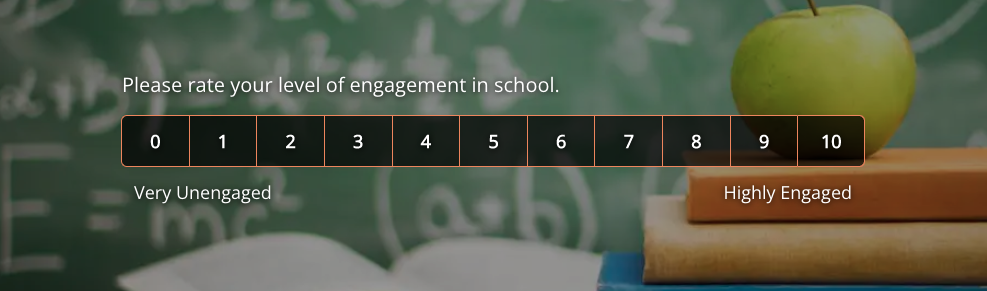

To determine validity, ask yourself: Is my question going to measure what is supposed to be measured? In the example above, the question uses a rating scale (0–10) as a test measure intended to assess the specific construct of student engagement. However, it’s not necessarily clear what engages the student. For example, a student could rate their level of engagement based on all factors about school (classes, teachers, socializing with friends, extracurricular activities). Or, they might respond based on their studies only, or a particular class in which the survey was conducted. A simple rewording could ensure understanding of the question’s intent.

To determine reliability, ask yourself: Will this question consistently elicit the same type of response? A student in a bad mood may respond “0” or very unengaged one day, and a week later, now in a better mood, respond with a “10,” or highly engaged. That makes responses pretty unreliable. Rewording the question to give a time frame (e.g., today, this week, this year) could help to get better reliability.

As you can see, while survey validity and survey reliability are closely related, they are not the same. A valid survey doesn’t necessarily equate to a reliable survey, and vice versa.

Survey Design and Critical Factors

Survey design is an essential aspect of collecting accurate and meaningful survey data. The way a survey is structured can significantly impact both the reliability and validity of the results. When designing a survey, researchers must pay close attention to several factors that can influence the quality of the data collected.

One of the most critical factors is the wording of survey questions. Clear, concise, and unbiased questions help ensure that respondents interpret them in the intended way, which is vital for both validity and reliability. Ambiguous or leading questions can introduce measurement error, making it difficult to obtain valid survey results. Additionally, the choice of response options—such as rating scales, multiple-choice answers, or open-ended responses—can impact the accuracy with which the survey measures its intended outcomes.

Another important consideration is the method of data collection. Whether a survey is administered online, in person, or by phone, each approach can introduce different types of bias or error. Consistency in survey administration helps improve reliability, as it reduces the likelihood of random errors or external factors influencing the results.

A well-designed survey also takes into account the order and flow of questions, ensuring that earlier questions do not influence responses to later ones. This attention to survey design helps produce reliable survey data that can be trusted for decision-making.

Ultimately, by focusing on these critical factors—question wording, response options, and data collection methods—researchers can create surveys that yield both reliable and valid data. Thoughtful survey design is the foundation for obtaining accurate results and making informed decisions based on survey research.

7 Types of Survey Validity

There are a couple of different factors to analyze when it comes to the validity and reliability of a survey. Validity is evaluated using various statistical methods and tests, which help determine whether research instruments accurately measure what they are intended to measure. When evaluating validity, it is important to consider that survey items can be divided into three categories: attitudinal, behavioral, and demographic, as each category can impact the validity of responses differently. Here are seven types of survey validity methods.

1. External Validity

This method of validity assesses sampling and looks for sampling errors. To be valid, a sample must be representative of the greater population. In our example, let’s say the population is all 300 senior students at a particular school. A 10% sample of 30 students may be acceptable, as long as those 30 are representative of the whole senior class.

However, what if the researcher simply surveyed one class of 30 students? The class could be one that most students dislike, so the majority may feel unengaged at the time. Or, it could be an AP (advanced placement) class with high achievers who are likely to be very engaged. Either way, the results are skewed. The researcher would be better off with a random sample of 30 students drawn from all 300 seniors to improve representativeness.
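As a rough illustration of the random sampling described above, here is a minimal Python sketch. The roster of student IDs 1 through 300 and the function name `draw_simple_random_sample` are assumptions made for this example, not part of any survey tool.

```python
import random


def draw_simple_random_sample(population, sample_size, seed=None):
    """Return sample_size distinct members chosen uniformly at random."""
    rng = random.Random(seed)  # a seed makes the draw repeatable for auditing
    return rng.sample(population, sample_size)


# Hypothetical roster: IDs 1..300 stand in for the 300 seniors.
seniors = list(range(1, 301))

# Every senior has the same chance of selection, unlike picking one class.
sample = draw_simple_random_sample(seniors, 30, seed=42)
print(sorted(sample))
```

Because every student has an equal chance of being picked, no single class (disliked or AP) can dominate the sample by design, although any one draw can still be unbalanced by chance.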

2. Internal Validity

This validity test determines whether the survey questions actually explain the outcome that is being researched. In our student engagement example, the researcher needs to ask questions that help identify factors that influence engagement. To ensure internal validity, these questions should be designed to measure the same construct, capturing the underlying concept of engagement. Internal validity also ensures that the survey is measuring the true value of the intended variable, not just providing consistent or reliable results. So, you would want to focus on the relationship between independent variables (e.g., grade point average, participation in extracurricular activities, level of truancy, disciplinary action taken, and so on) and the dependent variable (e.g., likelihood of engagement).

3. Statistical Validity

This type of survey validity concerns whether there are enough responses to support the conclusions drawn from them. In the student survey, for example, let’s say you conclude that the average level of engagement is a 7. But how many students responded? If there are 300 students, and only 10 responded, statistical validity is poor. To improve statistical validity, more students must participate.
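To put the response-count point in numbers, the sketch below estimates a margin of error for an average score at different response counts. It is only an approximation under stated assumptions: the standard deviation of 2.5 points is invented for illustration, the 1.96 multiplier assumes a normal approximation at roughly 95% confidence (a t-distribution would be more appropriate for very small samples), and respondents are assumed to be a random subset of the 300 students.

```python
from math import sqrt


def margin_of_error_for_mean(std_dev, n_responses, population_size, z=1.96):
    """Approximate margin of error for a mean, with finite population correction."""
    if population_size < 2 or not 0 < n_responses <= population_size:
        raise ValueError("need 0 < n_responses <= population_size and population_size >= 2")
    standard_error = std_dev / sqrt(n_responses)
    # The correction shrinks the error as the sample approaches the whole population.
    correction = sqrt((population_size - n_responses) / (population_size - 1))
    return z * standard_error * correction


ASSUMED_STD_DEV = 2.5  # hypothetical spread of scores on the 0-10 scale

for n in (10, 30, 100):
    moe = margin_of_error_for_mean(ASSUMED_STD_DEV, n, population_size=300)
    print(f"{n} responses: average is accurate to about +/- {moe:.2f} points")
```

Under these assumptions, 10 responses leave the average of 7 uncertain by roughly a point and a half in either direction, and the uncertainty narrows as more students respond.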

Additionally, estimating reliability is crucial for ensuring that survey results are statistically valid, as reliability estimates help assess the consistency of responses. Using different versions of a survey can also impact statistical validity, so it is important to consider how these versions may affect the reliability and interpretation of the results.

4. Construct Validity

This looks at survey design, e.g., the wording of the questions and the flow of questions. It also seeks to eliminate survey bias, including leading questions, which intentionally or unintentionally persuade the participant to answer in a particular way; loaded questions, which build an unverified assumption into the wording; and double-barreled questions, which ask multiple questions within one question. Additionally, questions should not be based solely on one perspective or assumption, as this can compromise the validity of the responses.

5. Face Validity

As the name implies, this form of validity takes your questions at face value: Do the items in the survey look like they’ll measure what they’re intended to measure? No mathematical formulas, no in-depth hypothesizing, just a good old-fashioned judgment call. If a researcher doesn’t trust their judgment or wants a second opinion, a survey expert may be asked to conduct a face validity check. Survey questions can also be tested by experts to ensure they appear to measure the intended construct.

6. Content Validity

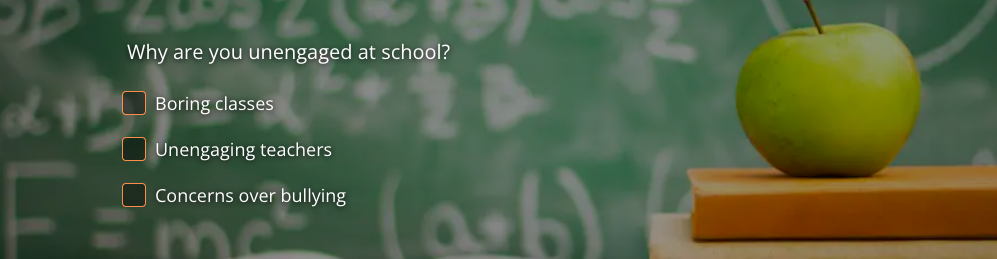

Content validity helps to ensure you get the full picture when it comes to respondent answers. Consider a follow-up question to the previous student engagement survey. If the student selects 5 or below, considered unengaged or very unengaged, using survey logic, the questionnaire leads to this question:

See a problem? The researcher may not get a clear picture of student engagement as it leaves out other possibilities. For example, “personal issues” or “home life” could be leading to disengagement. Or, perhaps the classes are “too easy” or “too hard” rather than just “boring.” It’s always a good idea to think of all potential answers, and at the very least, consider an “other” bucket.

7. Criterion Validity

This form of validity looks at whether answers fit with behaviors. In our example, let’s say a student says they are highly engaged. The researcher then looks at the student’s history and sees that the student has straight As and is involved in athletics and the drama club. This is evidence of criterion validity, as performing well in studies and participating in extracurricular activities would fit with high levels of engagement. If criterion validity doesn’t exist, the researcher then looks for the possible disconnect.

2 Types of Survey Reliability

Reliability refers to the consistency of survey results across repeated measurements or administrations. High reliability is achieved when a survey produces the same results over repeated administrations, indicating that the measurement tool is dependable. Low reliability, on the other hand, can undermine the usefulness of survey data by producing inconsistent or variable outcomes. It is important to note that low validity can still occur even if reliability is high, meaning that a survey may consistently measure something, but not necessarily what it is intended to measure.

High test-retest reliability indicates strong consistency of survey responses over time. Internal consistency is another key aspect of reliable surveys, reflecting the degree to which items within a survey measure the same construct. Interrater reliability also matters in qualitative surveys, where it ensures agreement among different raters or coders. Some models for assessing reliability, such as the multi-trait multi-method (MTMM) model and the true score model, consider two factors: the construct being measured and the method of measurement. This section focuses on the two types of reliability that apply most directly to survey questions: test-retest reliability and internal reliability.

1. Test-Retest Reliability

To determine if a survey is reliable, researchers need to consider test-retest reliability. In other words, if 100 students today say they are highly engaged, and they are surveyed again in a month during a second administration, would the results be similar? The second administration of the survey allows researchers to compare responses and assess the reliability or stability of the measurement over time. Sure, a student may pick up or lose interest in their studies or activities over time, but for the most part, it is reasonable to expect most students’ engagement to be steady.
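One common way to compare the two administrations is to correlate each student’s first score with their second score. The sketch below computes a Pearson correlation by hand; the eight pairs of scores are invented for illustration, and what counts as an acceptable correlation is a judgment call that depends on the study.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between paired scores from two administrations."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long lists with at least 2 scores")
    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n
    covariation = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    spread_a = sum((a - mean_a) ** 2 for a in first)
    spread_b = sum((b - mean_b) ** 2 for b in second)
    if spread_a == 0 or spread_b == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return covariation / sqrt(spread_a * spread_b)


# Hypothetical 0-10 engagement scores for the same 8 students, one month apart.
first_round = [8, 3, 9, 6, 7, 2, 10, 5]
second_round = [7, 4, 9, 6, 8, 3, 9, 5]

retest_r = pearson_r(first_round, second_round)
print(f"test-retest correlation: {retest_r:.2f}")
```

A value close to 1 suggests students answered much the same way both times; a value near 0 suggests the question is picking up mood or other short-term noise.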

2. Internal Reliability

For internal reliability, once again looking at our example, researchers need to examine whether students who tend to be disengaged also tend to be negative in response to questions about classes, teachers, and activities. The researcher would also look to see if highly engaged students are generally positive about some or all of these items. Internal consistency refers to the degree to which related survey items produce similar responses, indicating that the items are measuring the same underlying concept.
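Internal consistency is often summarized with Cronbach’s alpha, which compares the variance of individual items with the variance of respondents’ total scores. The sketch below is one way to compute it, not a method prescribed by this article; the three items (classes, teachers, activities) and the six students’ ratings are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of item scores, one row per respondent."""
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least 2 items and 2 respondents")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")
    item_columns = list(zip(*responses))  # regroup scores by item
    item_variance_sum = sum(variance(column) for column in item_columns)
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return n_items / (n_items - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical 0-10 ratings: [classes, teachers, activities] per student.
ratings = [
    [9, 8, 9],
    [2, 3, 2],
    [7, 7, 8],
    [4, 5, 3],
    [8, 9, 9],
    [3, 2, 4],
]

alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha: {alpha:.2f}")
```

Higher values indicate that the items move together, as expected when disengaged students rate all three areas low and engaged students rate them high.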

Conclusion

To be sure a survey provides accurate data, researchers should always consider both survey reliability and survey validity. Sometimes, hard analysis is needed; other times, an educated guess is acceptable. It’s all up to how valid and reliable you want your data to be. Ready to start surveying? SurveyLegend has you covered. Our online surveys are easy to use, not to mention easy on the eyes, with the ability to include survey images and picture questions. Begin your survey journey with SurveyLegend today!

Are you concerned with survey reliability and validity? How do you ensure your data is accurate? Properly citing surveys is also important for research credibility—learn how to cite a survey in different citation styles. We’d love to hear from you in the comments!

Frequently Asked Questions (FAQs)

What is survey validity?

Survey validity is the degree to which survey questions measure what they are intended to measure. It depends on participants understanding the questions and answer options the way the researcher meant them.

What is survey reliability?

Survey reliability is the degree to which survey questions yield consistent answers when the survey is repeated under similar conditions.

Can you have reliability without validity?

While reliability and validity are closely linked, they are not the same thing, and a survey can have one without the other. For example, a poorly worded survey may be reliable but not valid: participants answer the questions consistently, but because the questions are poorly worded, they consistently collect bad data.

Are surveys reliable?

Surveys are reliable when it can be expected that, over time, participants will answer in the same manner. Of course, things can change (attitudes, moods, impressions, and so on), which may require a change of questions.

Reliability in surveys is also closely tied to ethical standards. To learn more, see 10 Survey Research Ethics Considerations.

What are the seven types of validity in surveys?

External validity, internal validity, statistical validity, construct validity, face validity, content validity, and criterion validity.